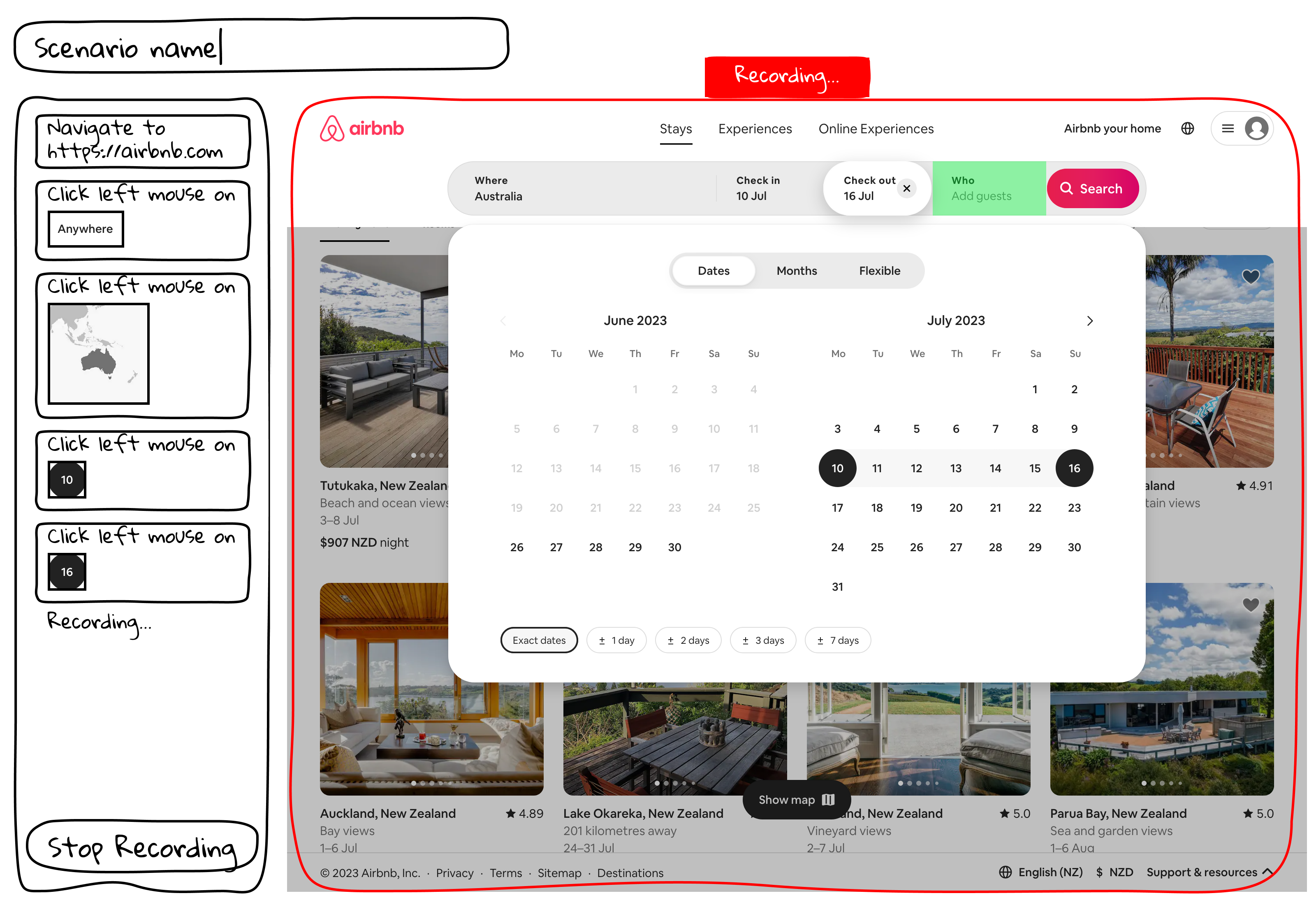

Recording Mode

Problem

Rainforest has many ways to organise tests, to meet the needs of teams that work with fairly mature software.

Features: a collection of tests that covers a specific function or workflow.

Example: shopping cart, login and registration, user management.

Run Groups: a collection of tests that are meant to run at the same cadence.

Example: smoke suite, regression suite, nightly build.

Tags: a collection of tests that share the same arbitrary characteristics.

Example: in review, critical, mobile.

Saved Filters: a collection of tests that meet a combination of advanced criteria.

Example: tests which were last edited by me, tagged critical, and failed.

While these rich organisational features are necessary in mature software, the leadership team posed a challenge: is there a market opportunity in early-stage software companies, and is there an ideal user experience that would make them happy and rapidly expand Rainforest’s existing customer base?

A small skunkworks team was assembled: a Product Manager and a Designer (me), tasked with working directly with the CEO to figure it all out, with technical input from Engineering Leads.

Design Process

Rainforest had a large number of existing customers that were barely active, or inactive altogether. We combed through that list, looked at their websites, and emailed their founders.

The marketing front page also had a large number of visitors, so we put up a banner that said “We’re working on something new. Want in?”

However they came, we asked one question: “what’s your biggest pain point when it comes to QA?”, and scheduled 1:1 meetings to listen to what they had to say. The fact that we were spread across timezones was an advantage: meetings could be planned with founders from many countries.

We worked mainly across two documents:

- Notes and verbatim quotes from each interview

- A FigJam whiteboard for analysis and putting frameworks together

Early on, we set up near-daily meetings to share observations and brainstorm hypotheses as close to each interview session as possible. As ideas and mockups started to take shape, we engaged Engineering Leads so technical research could happen as early as possible.

Challenges

Many founders we talked to at an early stage wanted to get their product to something that could be demoed and proven in the market as quickly as they could – optimising for maximum velocity rather than robustness – or pivot to something else. At this point, app testing happened by hand.

As most features landed, their product started gaining traction – landing a few contracts in the process. Suddenly, the software couldn’t afford breakages. Breakages would’ve led to loss of trust. Loss of trust would’ve led to churn – something catastrophic without a large customer base.

Breakthrough

At this critical intersection of a growing feature set and a shrinking tolerance for mistakes, testing by hand became unmanageable. Even so, founders still saw risk in replacing it with automated tests: writing them required a time investment, and they would rapidly go out of date whenever the visuals changed.

We realised that there was an opportunity to build a product laser-focused on solving this problem.

Minimum Viable Integration

We didn’t wait until we had anything playable. As soon as we finished a set of lo-fi mockups, we jumped on a call with our founders to understand their use case and show them what we were working on.

As mockups became prototypes, and prototypes became an alpha product, we repeated this cycle of interview–show–listen.

When our Engineering Team had built a reasonably hi-fi pre-alpha for internal use, we posted bite-sized images and GIFs in a private Slack channel, and invited founders to a personal walkthrough. Although not everything was playable in this internal build, this was the quickest way to get feedback.

The spirit of “do what’s viable now” meant that we continued keeping a very personal (and very manual) engagement with each founder, post-launch. We regularly inspected each account, helped them identify issues and work through fixes – sometimes through calls – and regularly posed questions to the cohort to get a sense of feature priority.

Result

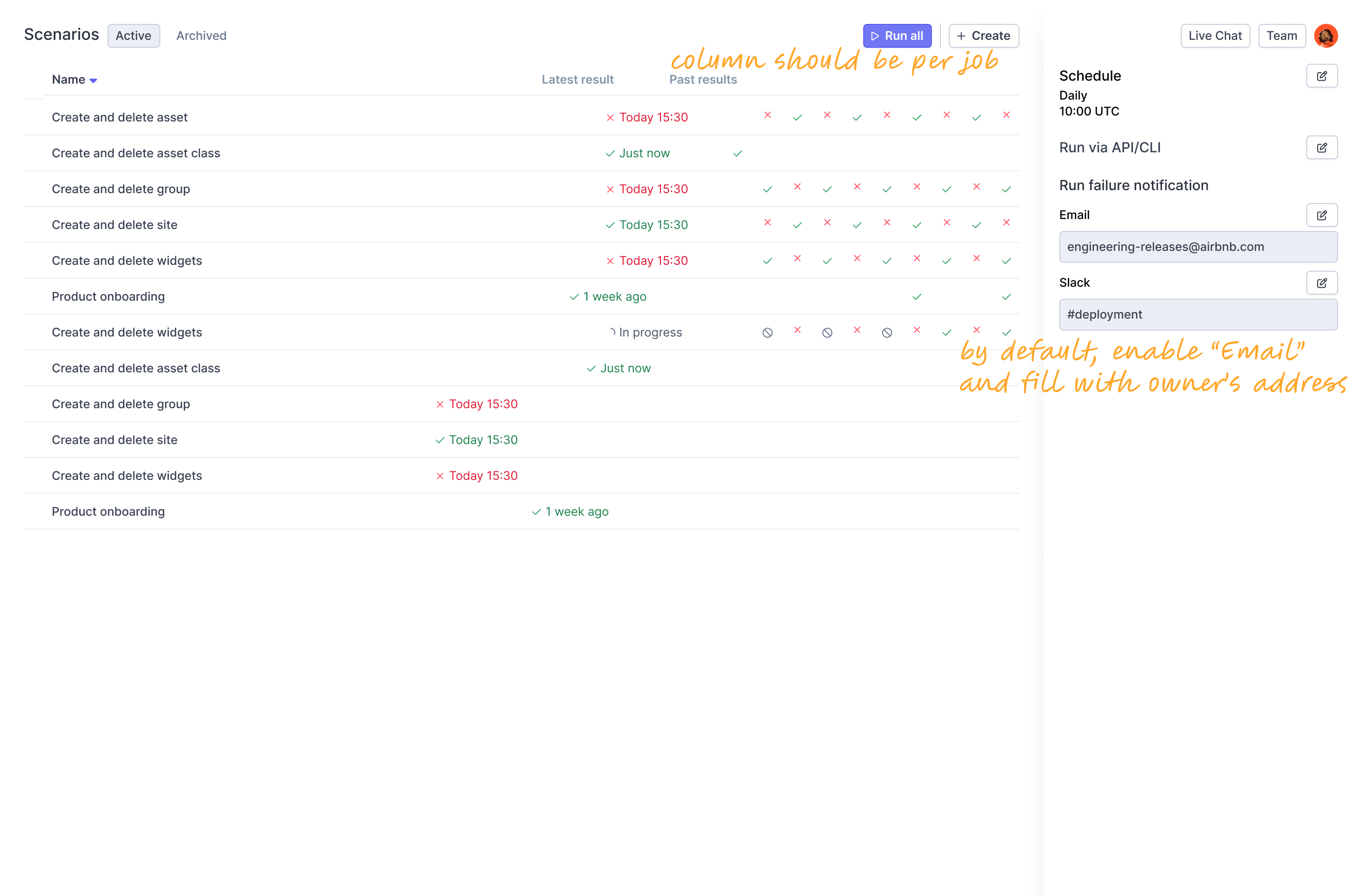

We created a radically slimmed down Rainforest experience, focused on doing a few key tasks really well:

- Create tests only for critical paths that can’t afford to break

- Write tests by naturally interacting with software, not by learning a UI

- Run tests as often as desired, with a flat fee structure

- Receive proactive alerts only when tests break

- Edit tests by re-recording interactions – minimising test maintenance

Launching Recording Mode and a highly tailored experience proved to the company that a small team, going all-in, could kickstart and launch a zero-to-one product in a relatively short period of time.

Rainforest took this to heart when it decided to focus all product squads – previously spread across different facets of the product – on the same initiatives: AI Block, then AI Recovery Mode.