AI Block

Idea

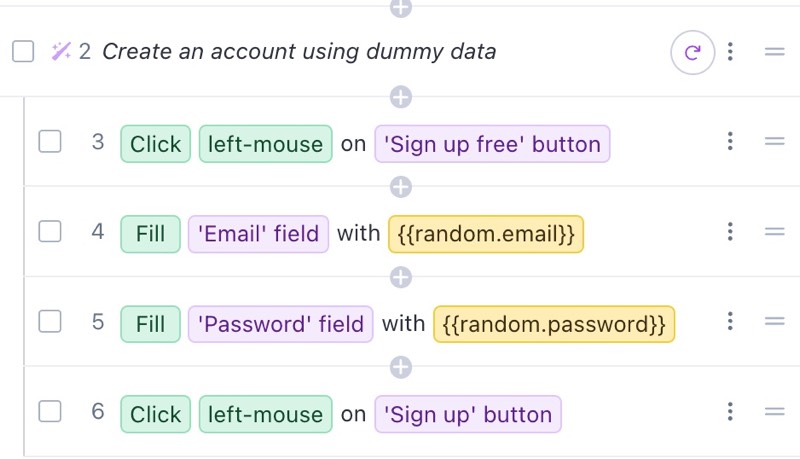

Before AI Block, you’d create a Rainforest test by manually adding a step, then selecting an action (such as Click, Type, or Hover) and defining a target (a screenshot of a UI element such as a button or field).

This process takes too long and has too many footguns. What if you could simply tell Rainforest what you want to do, have it understand what you mean, and build your entire test suite in plain English that you and your team can understand?

Design Process

I developed a new set of concepts every day, posted them on our Slack, and regularly reviewed them in person with PMs, lead engineers, and other stakeholders.

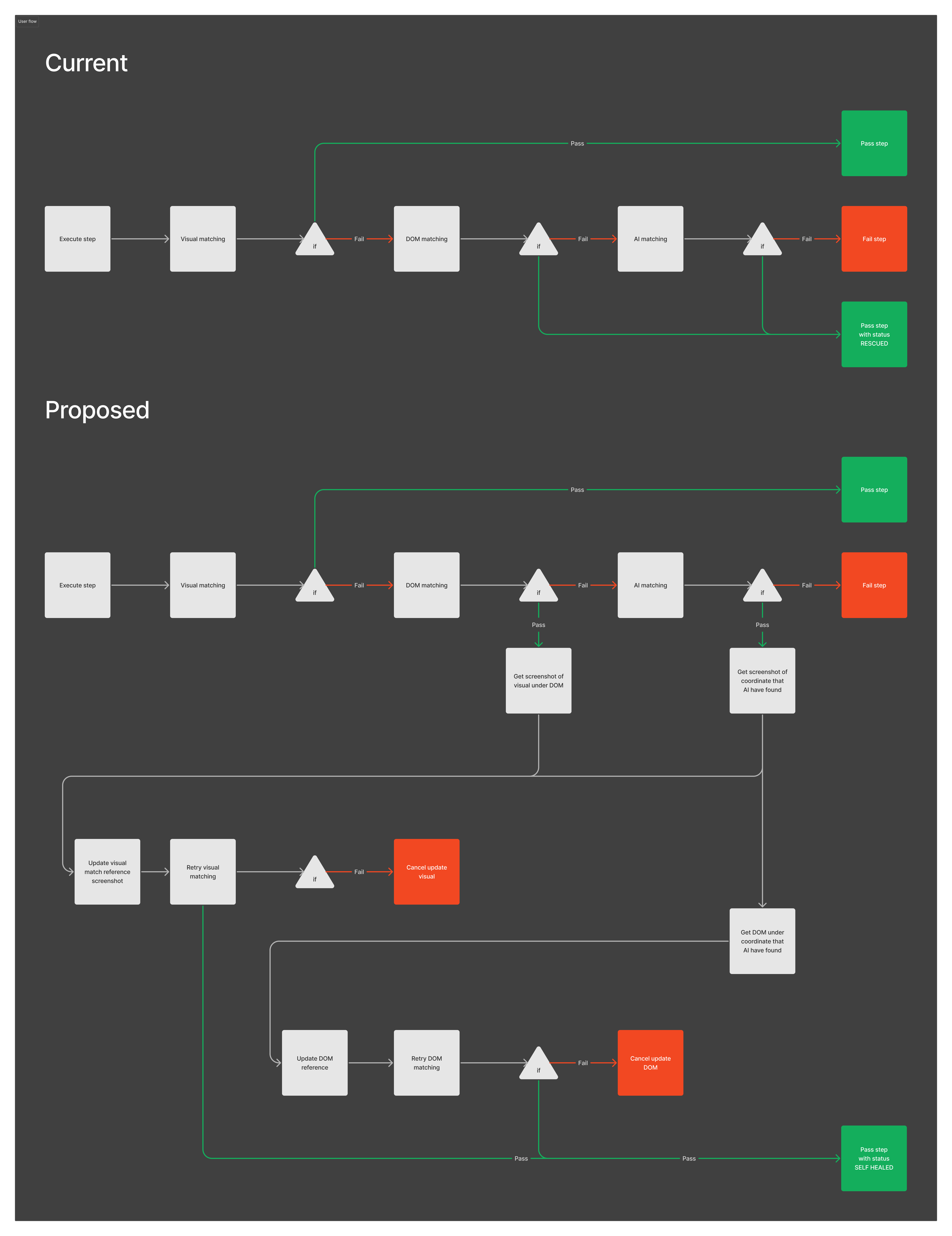

These concepts started out not as pixels, but as flow diagrams that roughly demonstrated the high-level workings of the mechanism.

Why diagrams? Because they are light on the visual details that would distract from the challenge we needed to overcome: is AI Block possible, and can we build it in time?

Challenge

On paper, the idea seemed very simple: input plain English, output actions. Yet there’s an inherent challenge. When you manually pick an action, you get to decide what it is and what it should target. But an action entered as a prompt is interpreted by AI.

The same creativity and flexibility that allows AI to interpret ‘Register with random credentials’ to mean ‘Click A, Fill B, Fill C, Fill D, Click E’ also allows it to interpret prompts in all sorts of other creative and unintended ways.
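To make the example concrete, here is a minimal sketch of how one prompt might expand into a sequence of actions, each pairing an action type with a target. The `Action` class and `summarize` helper are hypothetical names used for illustration only, not Rainforest’s actual data model, and the targets A to E are the placeholders from the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Action:
    """Hypothetical model of one test step: an action type plus a target."""
    kind: str    # e.g. "click" or "fill"
    target: str  # placeholder label for the UI element being targeted


# One intended expansion of a single plain-English prompt.
prompt = "Register with random credentials"
intended: List[Action] = [
    Action("click", "A"),
    Action("fill", "B"),
    Action("fill", "C"),
    Action("fill", "D"),
    Action("click", "E"),
]


def summarize(actions: List[Action]) -> str:
    """Render actions in the 'Click A, Fill B' style used in the text."""
    return ", ".join(f"{a.kind.capitalize()} {a.target}" for a in actions)


print(summarize(intended))
```

The point of the sketch is that nothing in the prompt itself pins down this particular list: any other sequence of `Action` values is an equally valid data structure, which is why unintended interpretations are possible.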

Can we make it flexible, but only in ways that we want it to be flexible?

Minimum Viable Integration

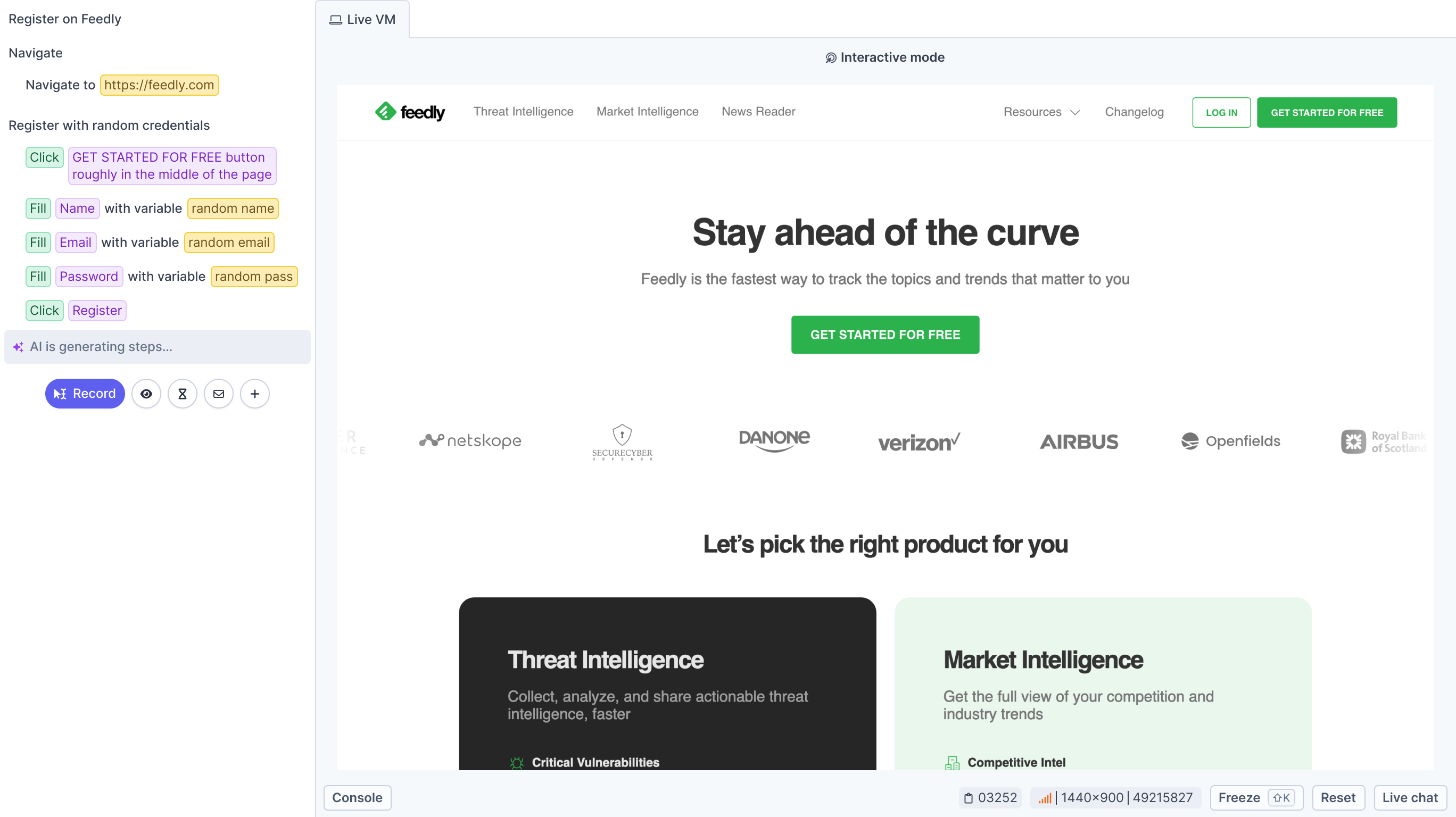

The only way to test its viability was in the real world. So we integrated AI Block into the Rainforest QA Test Editor in a very minimal way, while the user experience was still up in the air. All we knew was that it needed a text input. But at which level, and how detailed?

We bootstrapped a fresh account with AI Block, brainstormed a list of common website tasks to serve as prompts, and researched well-known online shops on which to try them.

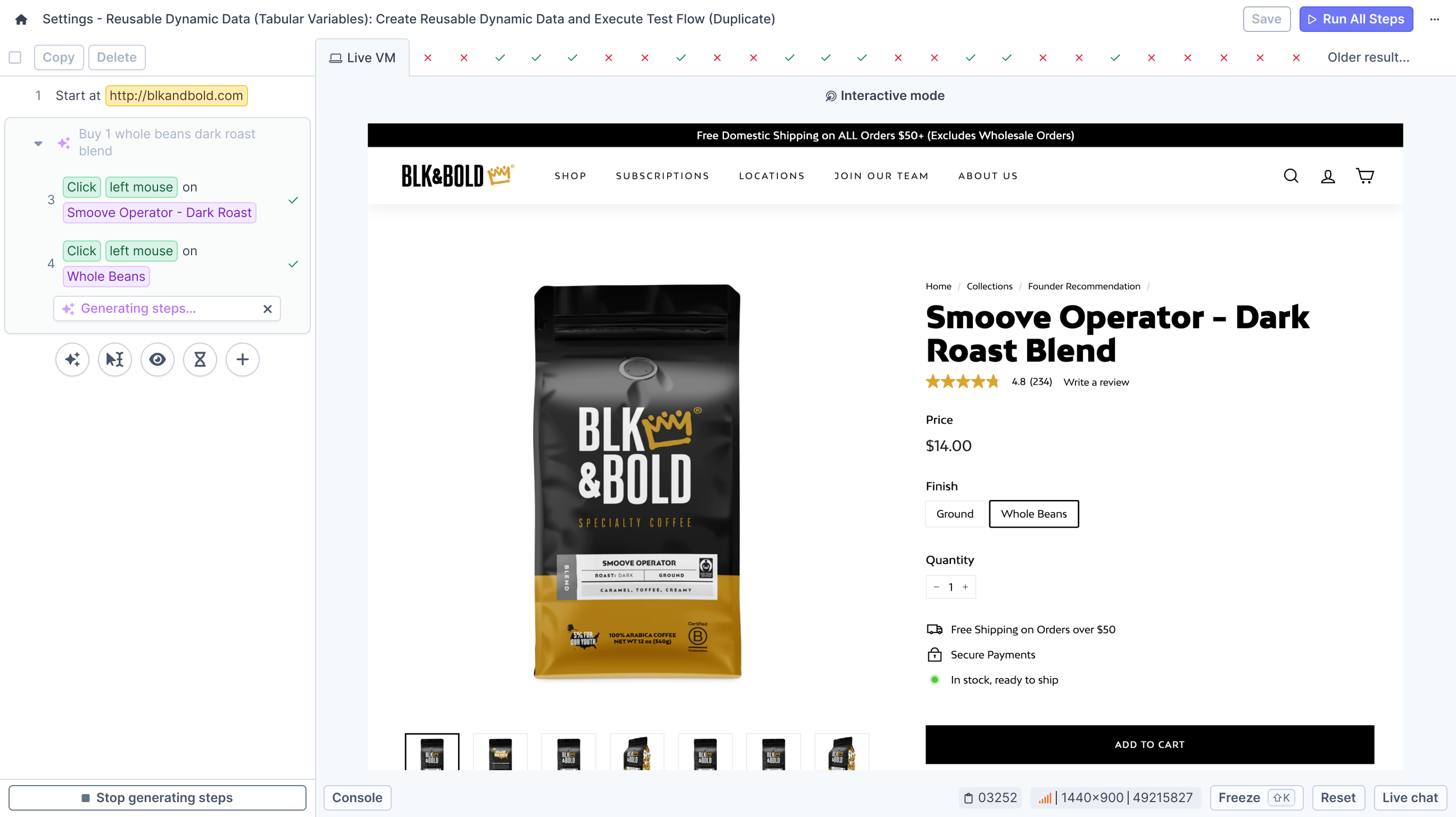

Then we went to town, started testing, and generated hundreds upon hundreds of actions. Does “Create an account” work if the link is labelled this or that? Can “Fill the shipping form with random data” be done accurately on shipping pages that look wildly different from one another?

Our initial findings were discouraging. Not only did AI generate random, unrelated actions a lot of the time, it would also sometimes see something that wasn’t visible on the page because it was trying to be helpful.

Breakthrough

Attempts to make the original AI recipe longer and more accurate hit a plateau. The way our AI was initially constructed was too unreliable. Through async concept presentations and feedback, face-to-face brainstorming sessions, and technical mockups, we found at least two major improvements:

- A system of complementary agents that check each other’s work:
  - Plan the high-level job to be done
  - Verify the job’s viability as an action
  - See the action’s element target on the page
  - Create the action
- A way to evaluate the correctness of generated actions, measure it over time, and prevent regression. This is important because a prompt like “Buy a product on the page” can have many correct answers (e.g. the product could be a shirt, a hat, or a bag).
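The evaluation idea, scoring a generated action against several acceptable answers and watching the score over time, could be sketched roughly as follows. This is an illustration under stated assumptions, not Rainforest’s actual evaluation harness: `EvalCase`, `pass_rate`, `has_regressed`, and `fake_generate` are hypothetical names, the generator is a stub standing in for the real AI, and the baseline value is made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass(frozen=True)
class EvalCase:
    """A prompt plus every target that counts as a correct answer."""
    prompt: str
    accepted_targets: FrozenSet[str]


def pass_rate(cases: List[EvalCase], generate: Callable[[str], str]) -> float:
    """Fraction of cases whose generated target is in the accepted set."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(1 for c in cases if generate(c.prompt) in c.accepted_targets)
    return passed / len(cases)


def has_regressed(current: float, baseline: float, tolerance: float = 0.0) -> bool:
    """True when the current pass rate falls below the stored baseline."""
    return current < baseline - tolerance


cases = [
    # Many correct answers: any of these products is acceptable.
    EvalCase("Buy a product on the page", frozenset({"shirt", "hat", "bag"})),
    EvalCase("Create an account", frozenset({"sign-up link"})),
]


def fake_generate(prompt: str) -> str:
    """Stub generator (assumption): picks 'hat' for one prompt, misses the other."""
    return {"Buy a product on the page": "hat"}.get(prompt, "footer logo")


rate = pass_rate(cases, fake_generate)
print(rate)                                # 0.5
print(has_regressed(rate, baseline=0.75))  # True
```

Checking membership in a set of accepted targets, rather than equality with a single expected target, is what lets “hat” pass for the shopping prompt. Storing the pass rate from each run gives a baseline to compare against before a change ships.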

This AI fine-tuning, infrastructure, and engineering work went side by side with design, allowing the product experience to morph as we learnt new things. For instance, after our team found that complementary agents worked better than a single one, I had to design ways to visualise multi-agent reasoning.

Result

By simply typing, a user can now tell Rainforest AI what they want to do, in as little or as much detail as they like, and have AI turn their prompt into actions and element targets.

Thanks to its directness and simplicity, Rainforest QA decided to make AI Blocks the default way to create actions. It also helped attract attention from a few investors and paved the way for AI Recovery Mode.