AI Recovery Mode

Problem

Tests are created to ensure that your app is working. Sometimes, tests fail because features don’t work as designed. At other times, tests fail because features have been updated but the test hasn’t.

Tests can fail in many ways (those two are just the most common), but one thing is always true: to fix a test, you have to watch the video replay, work out which steps went wrong, and fix the problem – all by yourself.

Test maintenance is manual and time-consuming. Every UI testing tool on the market struggles with this problem. It’s why people don’t update their tests to match their app’s latest functionality. It’s why they then risk disengaging and dropping off.

Our users are right. They’re saying, “If I fix my test now, it will break again when I next update this feature. So why worry about updating the test if I can continue building features?”

Fundamentally, time spent debugging tests is time spent not shipping software. If we could fix that, we would solve the number one pain point in the software.

Design Process

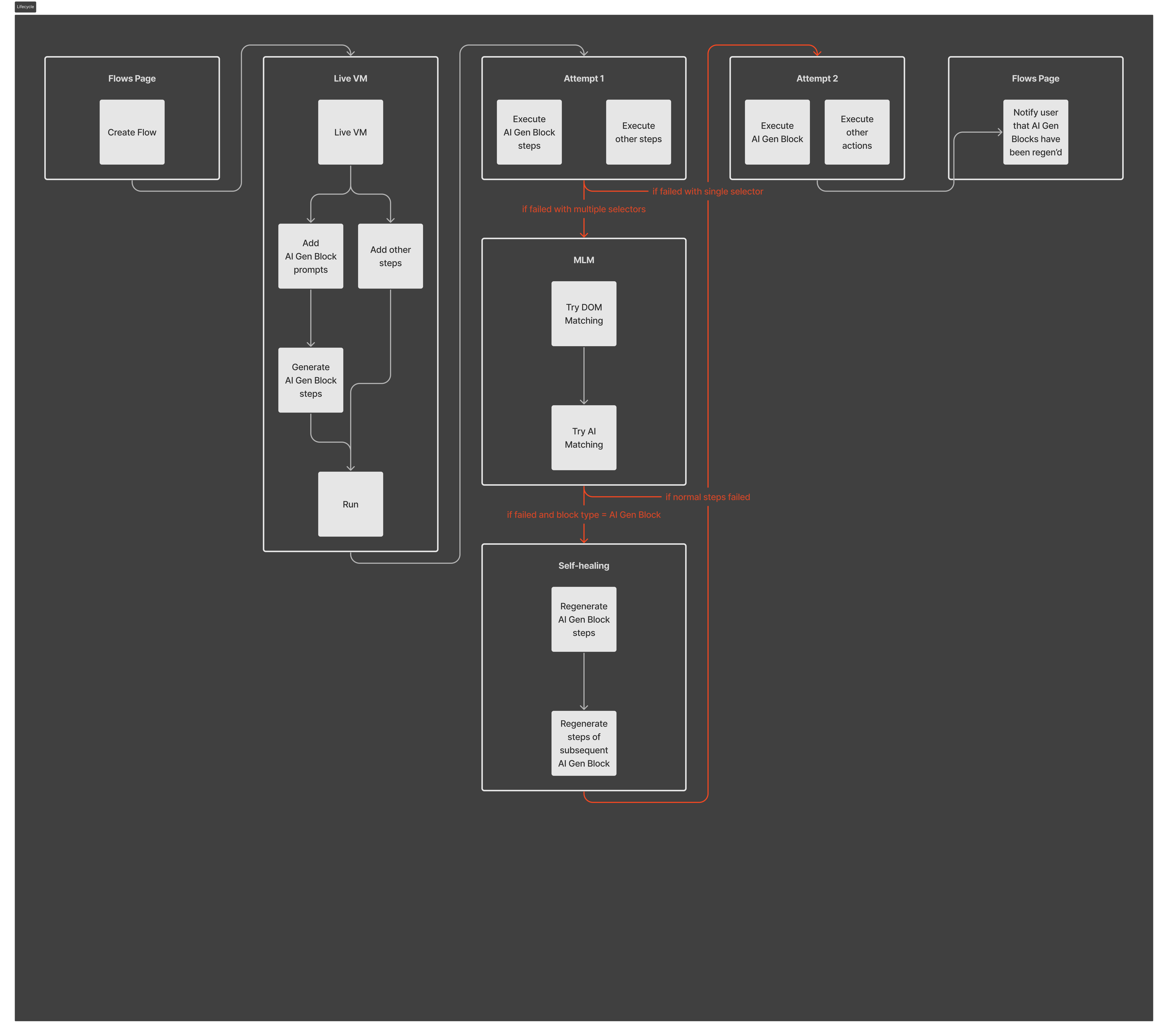

The problem was clear, but the solution wasn’t. That changed when AI Block launched and our team started asking, “What if the same agents that generate actions could step in when those actions fail, re-generate them, and recover the test?”

Because the team had agreed on how the high-level mechanism should work, my flow diagrams could go into lower-level detail. Even so, a few questions and challenges remained.

Challenges

We can unleash our complementary agents when a test fails, but how will they understand the user’s original intention?

Test failures almost always happen in the middle of a test. If our agents step in at that point, how will they know when to stop re-generating actions? And how can they ensure that subsequent actions that were perfectly okay are not touched?

Minimum Viable Integration

We needed actual failures to see whether our AI could recover from them. So we took a sample of failures from various customers and sorted them by the ways AI could have fixed them. We made two findings.

First, our agents would sometimes start hallucinating. They would fix failures, but often in ways that let a test pass in situations where it was supposed to fail.

For example, if the original action was to click a button called “Checkout” but it couldn’t be found on the page, a reasonable fix would be to look for the button by scrolling down. Instead, AI would sometimes append /checkout to the URL, refresh the page, or go to Google and search “How to checkout”.

Second, our agents would sometimes end up going too far after fixing.

For example, if the original instruction was to “Fill in the shipping form with sample data”, AI would sometimes submit the form, wait for the next page to load, and click the “Buy Now” button there.

These were both creative behaviours, but they weren’t quite right. If we were going to make AI Recovery work, the agents needed context.

Breakthrough

To stop the agents from hallucinating, AI Block came to the rescue. It had been live for a few weeks, and our users had been actively writing prompts for it. As it turned out, when our agents were given these prompts at the point of failure, they became accurate at recovering from failures, without hallucinating.

To stop the agents from going too far, we had to accept that their creativity in interpreting prompts and generating actions was limiting their ability to recover from failures. There had to be a trade-off: we couldn’t use the same agents for both activities.

Result

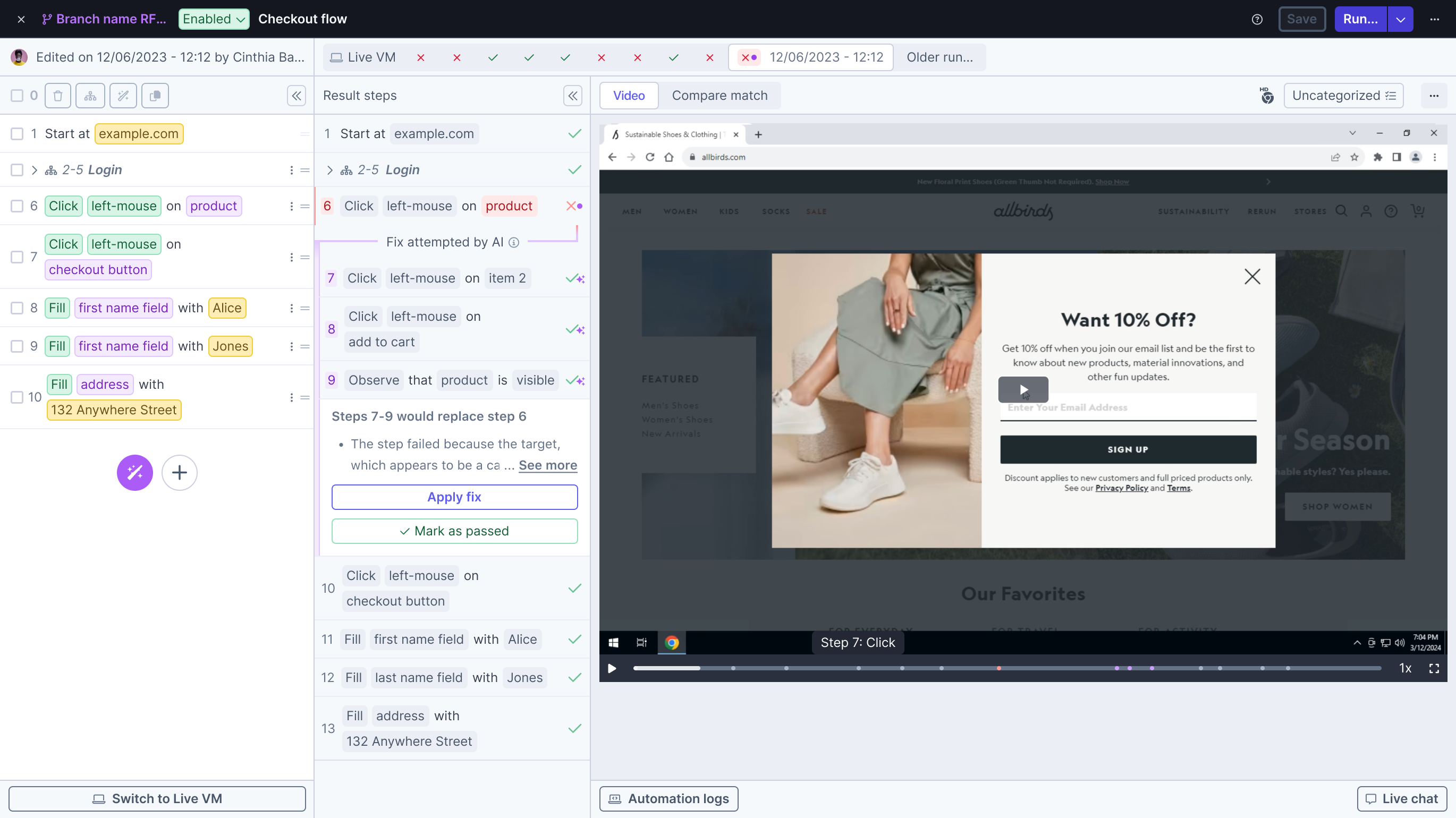

We created a separate set of agents especially suited for recovery tasks. Instead of being free to interpret instructions, they were given an explicit goal: try a set of actions that a human tester might do (like scrolling up/down or clicking on an item that conceptually means the same thing) and don’t try others (like going to a different page, modifying the URL, or using the browser interface).
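
One way to picture this constraint is as an allowlist applied to whatever the recovery agent proposes. The sketch below is only an illustration under assumptions: the action names, the `Action` type, and `filter_recovery_actions` are hypothetical and are not taken from the product.

```python
from dataclasses import dataclass

# Hypothetical action kinds, named after the examples in the text.
# Things a human tester might do:
ALLOWED_RECOVERY_ACTIONS = {"scroll_up", "scroll_down", "click_equivalent_element"}
# Things the recovery agents are told not to try:
FORBIDDEN_RECOVERY_ACTIONS = {"navigate_to_page", "modify_url", "use_browser_interface"}


@dataclass(frozen=True)
class Action:
    kind: str          # one of the hypothetical action kinds above
    target: str = ""   # optional detail, e.g. a button label or URL suffix


def filter_recovery_actions(proposed: list[Action]) -> list[Action]:
    """Keep only proposed actions whose kind is on the allowlist.

    Anything not explicitly allowed is dropped, so an unknown action kind
    is treated the same way as a forbidden one.
    """
    return [action for action in proposed if action.kind in ALLOWED_RECOVERY_ACTIONS]


# Usage: the agent proposes three actions for a missing "Checkout" button.
proposed = [
    Action("scroll_down"),
    Action("modify_url", "/checkout"),
    Action("click_equivalent_element", "Checkout"),
]
kept = filter_recovery_actions(proposed)
```

Defaulting to "deny unless allowed" is a design choice in this sketch: it keeps the recovery agents inside the narrow set of behaviours described above even if they propose something nobody anticipated.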

And instead of delaying feature launch until we got the behaviour correct 100% of the time, we implemented policies for different types of AI Recovery:

- Failures that happen inside AI Blocks have a prompt, and AI will fix them accurately. Apply the fix to the test straight away.

- Failures that happen outside AI Blocks have no prompt. There’s a chance hallucination may occur. Ask users to review the fix before applying it.
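
The two policies above amount to a single branch on whether the failure happened inside an AI Block. A minimal sketch, assuming hypothetical names (`FixPolicy`, `choose_fix_policy`) that do not come from the product:

```python
from enum import Enum


class FixPolicy(Enum):
    APPLY_IMMEDIATELY = "apply_immediately"  # fix is written to the test straight away
    REQUIRE_REVIEW = "require_review"        # user reviews the fix before it is applied


def choose_fix_policy(failed_inside_ai_block: bool) -> FixPolicy:
    """Pick a policy for a recovered failure.

    Inside an AI Block there is a user-written prompt to guide the fix,
    so it is applied directly. Outside one there is no prompt, so the
    fix goes to the user for review first.
    """
    if failed_inside_ai_block:
        return FixPolicy.APPLY_IMMEDIATELY
    return FixPolicy.REQUIRE_REVIEW
```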

Launching this feature meant that customers who had yet to use AI Block had a strong incentive to try and adopt it – and those who actively used AI Block were, at long last, freed from much of their test maintenance burden.